Microscopic assembly line robots are the most beautiful new thing I’ve seen all month

And now… your moment of Zen.

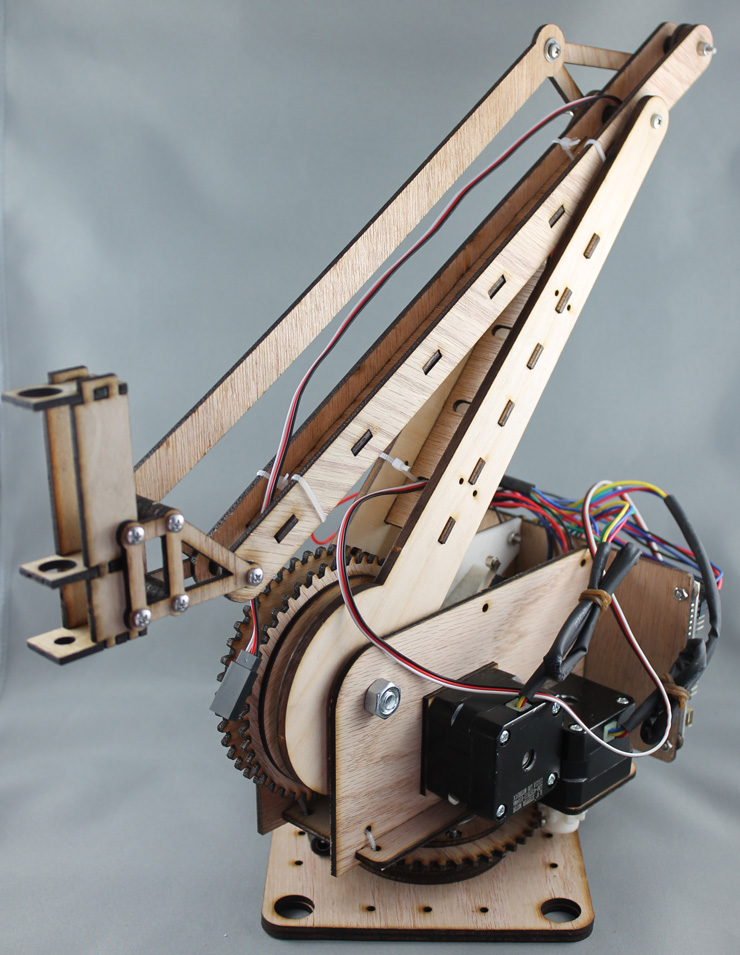

I’ve just added a dimension drawing of the base for the Arm3 robot to the ROBO-0023 Arm3 product page. This way you should be able to integrate it into your automation solution more easily. I’ve also updated the description with a special section that covers the technical abilities of the Arm3.

Reddit.com robotics user newgenome heard about my project and sent in this video of self-assembling FANUC robot arms. The SFW automation porn fiesta commences now:

So it seems I’m not the first to achieve this goal… but I can still be the first in the open-source community. Opportunity awaits!

I’ve just updated the software for the Arm3 project. Everything between here and the San Mateo Maker Faire is about putting my best foot forward. I’ve just rearranged the office to make a workspace for the arms. Now I have to lay out the components and teach the arms to assemble one of their own. I wonder how many tries it will take? You’ll have a chance to try it yourself at the Faire.

The next step for me is to record and play back actions for several robots working in concert. This way the various stages of assembly can be tackled in sections.

I also rewrote the HTML and CSS for the site so that our Facebook fans will no longer get the PayPal logo where there should be a relevant robot picture.

Anyways, on with the software update.

I hope this program will be reused in the coming months to simulate all Marginally Clever robots.

– OpenGL graphics that simulate arms

– Synchronizes real arm(s) and simulation

– WASDQE+mouse camera controls

– UIOJKL arm movement w/ IK solving (moves along Cartesian grid)

– RTYFGH arm movement w/o IK solving (moves motors only)

– Spacebar to switch between arms

– Added EEPROM version and GUID records

Arm3 robot trainer is coming along. There were several false starts until I figured out the right way to get Swing and OpenGL to play nice together in Java.

In this proof-of-concept the real machine moves to match the virtual model. The robot already understands Inverse Kinematics so there are two ways to drive the machine: the first is to move along XYZ lines and the IK system figures out how to match your request. The second is to move the motors directly. A trainer can use either one or both at once.
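To make the two drive modes concrete, here is a minimal sketch in Java. It is not the actual Arm3 code. It assumes a simplified arm with three joints (base yaw, shoulder, elbow), made-up link lengths, and a target measured from the shoulder pivot. The class name `Arm3IkSketch` and both methods are hypothetical. `solve` is the IK direction (XYZ request in, joint angles out) and `forward` is the motors-only direction (joint angles in, XYZ out).

```java
/** Hypothetical 3-joint arm: base yaw, shoulder pitch, elbow bend. */
final class Arm3IkSketch {
    // Assumed link lengths in cm. These are NOT the real Arm3 dimensions.
    static final double UPPER = 20.0;
    static final double FORE = 20.0;

    /**
     * IK mode: returns {base, shoulder, elbow} in radians for a target
     * (x, y, z) relative to the shoulder pivot, or null if out of reach.
     */
    static double[] solve(double x, double y, double z) {
        double base = Math.atan2(y, x);      // turn the base to face the target
        double r = Math.hypot(x, y);         // horizontal distance to the target
        double d2 = r * r + z * z;           // squared shoulder-to-target distance
        // Law of cosines gives the elbow bend (0 = arm fully straight).
        double c = (d2 - UPPER * UPPER - FORE * FORE) / (2 * UPPER * FORE);
        if (c < -1.0 || c > 1.0) {
            return null;                     // target is outside the reachable shell
        }
        double elbow = Math.acos(c);
        // Elbow-up solution: the forearm points (shoulder - elbow) above horizontal.
        double shoulder = Math.atan2(z, r)
                + Math.atan2(FORE * Math.sin(elbow), UPPER + FORE * Math.cos(elbow));
        return new double[] {base, shoulder, elbow};
    }

    /** Motors-only mode: joint angles in, resulting XYZ position out. */
    static double[] forward(double base, double shoulder, double elbow) {
        double r = UPPER * Math.cos(shoulder) + FORE * Math.cos(shoulder - elbow);
        double z = UPPER * Math.sin(shoulder) + FORE * Math.sin(shoulder - elbow);
        return new double[] {r * Math.cos(base), r * Math.sin(base), z};
    }

    public static void main(String[] args) {
        double[] joints = solve(25, 10, 5);
        double[] tip = forward(joints[0], joints[1], joints[2]);
        // The tip should land back on the requested point, within rounding.
        System.out.printf("tip = (%.3f, %.3f, %.3f)%n", tip[0], tip[1], tip[2]);
    }
}
```

A trainer that mixes both modes could nudge a joint directly, call `forward` to find where the tip ended up, and then continue with XYZ moves from that point.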

Next step is to record & play back sessions. Once that’s been achieved people can share training sessions with each other online – remix, tweak, collaborate, use github, and so on.
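One way record and playback could work, sketched in Java under some assumptions: a session is just a list of timestamped joint-angle frames, and the arm is anything that accepts an array of angles. The names `TrainingSession`, `Frame`, `record`, and `play` are hypothetical and are not taken from the Arm3 software.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical training session: an ordered list of timestamped poses. */
final class TrainingSession {
    /** One sample: milliseconds since recording began, plus joint angles. */
    static final class Frame {
        final long millis;
        final double[] joints;

        Frame(long millis, double[] joints) {
            this.millis = millis;
            this.joints = joints.clone(); // copy so later edits cannot alter history
        }
    }

    private final List<Frame> frames = new ArrayList<>();

    /** Appends a pose. Callers are expected to record in time order. */
    void record(long millis, double... joints) {
        frames.add(new Frame(millis, joints));
    }

    int size() {
        return frames.size();
    }

    /**
     * Replays every frame to the given arm, keeping the recorded spacing
     * between frames divided by speed (2.0 plays twice as fast).
     */
    void play(Consumer<double[]> arm, double speed) {
        if (speed <= 0) {
            throw new IllegalArgumentException("speed must be positive");
        }
        long previous = 0;
        for (Frame f : frames) {
            long wait = (long) ((f.millis - previous) / speed);
            try {
                if (wait > 0) {
                    Thread.sleep(wait);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // stop playback if interrupted
                return;
            }
            arm.accept(f.joints.clone());
            previous = f.millis;
        }
    }

    public static void main(String[] args) {
        TrainingSession session = new TrainingSession();
        session.record(0, 0.0, 0.5, 1.0);
        session.record(250, 0.1, 0.6, 0.9);
        // Stand-in for a real or simulated arm: just print each pose.
        session.play(j -> System.out.println(java.util.Arrays.toString(j)), 1.0);
    }
}
```

Because the arm is only a `Consumer<double[]>`, the same session could be sent to the simulation, to a real arm, or to both. A plain list of frames would also be easy to write out as text for sharing.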

I’m looking for resellers and people who are interested in using this robot to solve real-world problems. I’m also getting ready for the San Mateo Maker Faire & MakerCon. Will I see you there? We should meet up and talk shop.